Risk assessments in 2026

Continued from thoughts-on-cyber-risk

NIST is the National Institute of Standards and Technology, and it provides in-depth documentation on a wide range of topics. For our purposes we are focused on the 800 series, which NIST describes as: "Publications in NIST’s Special Publication (SP) 800 series present information of interest to the computer security community. The series comprises guidelines, recommendations, technical specifications, and annual reports of NIST’s cybersecurity activities."

Digging into the list, we see a number of documents that have multiple revisions, along with new documents created as recently as 2025. We are most interested in the Risk Assessments document to help us capture our risk measurements.

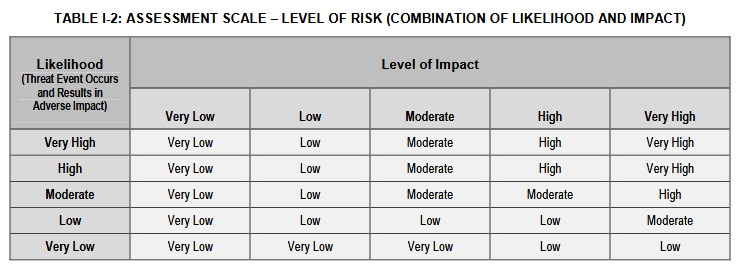

We can see it hasn't been updated in over 10 years. Digging into the document, it provides some useful considerations for someone thinking about threats in their environment. Where it completely falls apart is in how to represent those threats. From Figure I-2

If, however, your target audience is unfamiliar with security, or possibly even hostile to increased investment, then this model brings nothing to the table. Questions that get asked when adding a new critical risk, such as "There are already 4 critical items and nothing happened, why does it matter if there is a fifth?" and "What is the point of SIEM, how does it support the business, who is using it?", are tougher to answer. Now, I think many security folks will say "That's part of the job, you have to be able to paint a picture to executives and lead without authority, etc." I agree that working with other leaders is part of the job, but relying on individual sales skills and the audience's domain knowledge is a weak position to operate from.

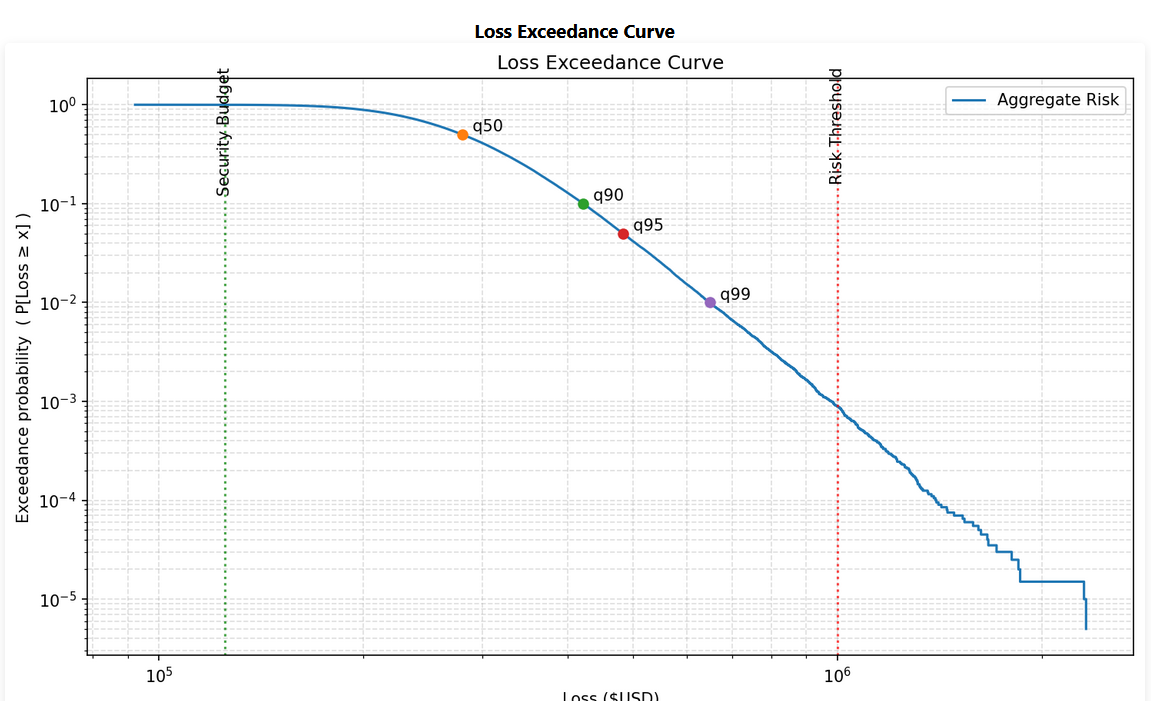

The need to move from qualitative to quantitative has been recognized by other folks. The FAIR Institute is the current market leader pushing adoption in this space. I think their quantification at the individual scenario level is missing the boat. The goal of all this quantification is to get to a place where security can go to a different executive and say "We have exceeded our accepted risk level by buying this tool, doing this M&A, refusing to address tech debt. We need to either adjust the accepted risk level or start closing out gaps." This led me to create a quick loss estimator tool.
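To make the "we have exceeded our accepted risk level" conversation concrete, here is a minimal sketch of comparing total expected loss against an agreed number. It is not the estimator tool itself. The `Risk` class, the function names, and every probability and dollar figure are hypothetical and chosen only for illustration. It assumes each risk can be summarized as an annual probability times a dollar impact, which is a simplification.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    """One entry in a hypothetical risk register."""
    name: str
    annual_probability: float  # estimated chance of occurring in a year (0 to 1)
    loss_if_realized: float    # estimated dollar impact if it happens


def expected_annual_loss(risks):
    """Sum of probability * impact across all risks (simplifying assumption)."""
    return sum(r.annual_probability * r.loss_if_realized for r in risks)


def appetite_gap(risks, accepted_risk):
    """Dollars over (positive) or under (negative) the accepted risk level."""
    return expected_annual_loss(risks) - accepted_risk


# Illustrative numbers only; none of these come from real data.
register = [
    Risk("Unaddressed tech debt on a legacy system", 0.10, 2_000_000),
    Risk("Acquired company not yet integrated", 0.05, 5_000_000),
    Risk("New tool holding customer data", 0.02, 1_500_000),
]
accepted_risk = 400_000  # hypothetical board-agreed number

gap = appetite_gap(register, accepted_risk)
if gap > 0:
    print(f"Over accepted risk by ${gap:,.0f}: raise the limit or close gaps")
else:
    print(f"Within accepted risk, ${-gap:,.0f} of headroom")
```

The point of summing across the register, rather than debating each scenario on its own, is that the executive conversation becomes about one number against one agreed limit.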

The purpose of this tool is to show what is being spent on security (the security budget line) and how much risk is accepted within the organization (the risk threshold). The idea is that as we spend more money on security, our risk goes down (as IBM's Cost of a Data Breach Report 2025 demonstrated). This agrees with a mental model where, if we spend no money on security, we have to pull other resources to handle any security event, whereas if we are completely over-provisioned on security (an in-house security crisis PR team sitting waiting to respond, in-house privacy counsel on hand, dedicated tooling and engineers, etc.), we would expect the damage from an incident to be very well contained. Striking that balance requires board-level buy-in and a negotiation on a specific agreed number that represents the risk appetite, or risk credit card. As new risks are found, we need to start paying down the debt.
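The relationship between the budget line and the risk threshold can be sketched in a few lines. This is an illustration of the mental model, not the actual tool. The exponential decay shape, the function names, and all parameter values are assumptions made for the example. Any decreasing curve with a floor (the "very well contained" case) would make the same point.

```python
import math


def expected_loss(spend, unprotected_loss, floor_loss, decay_per_dollar):
    """Expected annual loss at a given security spend.

    Assumed shape: loss starts at unprotected_loss with zero spend and
    decays exponentially toward floor_loss as spend increases.
    """
    return floor_loss + (unprotected_loss - floor_loss) * math.exp(
        -decay_per_dollar * spend
    )


def minimum_spend_for_threshold(risk_threshold, unprotected_loss,
                                floor_loss, decay_per_dollar):
    """Smallest spend that brings expected loss down to the risk threshold.

    Returns 0.0 if the threshold is already met with no spend, and None
    if no amount of spend can reach it under this model.
    """
    if risk_threshold >= unprotected_loss:
        return 0.0
    if risk_threshold <= floor_loss:
        return None
    ratio = (unprotected_loss - floor_loss) / (risk_threshold - floor_loss)
    return math.log(ratio) / decay_per_dollar


# Hypothetical inputs for illustration only.
params = dict(unprotected_loss=5_000_000, floor_loss=250_000,
              decay_per_dollar=1e-6)
risk_threshold = 1_000_000   # the agreed "risk credit card" limit
security_budget = 1_200_000  # the current budget line

needed = minimum_spend_for_threshold(risk_threshold, **params)
current = expected_loss(security_budget, **params)
print(f"Expected loss at current budget: ${current:,.0f}")
if needed is None:
    print("Threshold is below the modeled floor; renegotiate the threshold")
else:
    print(f"Spend needed to stay within threshold: ${needed:,.0f}")
```

If the expected loss at the current budget sits above the threshold, the organization is carrying more risk than it agreed to, and the choice is the one described above: raise the threshold or fund the gap.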

That is how I think it should work anyway.

My personal goal in all of this is arming security teams and professionals with ways to show the real value they provide, in dollar signs, because they do have an impact, and it can be measured.